In one form or another, C has influenced the shape of almost every programming language developed since the 1980s. Some languages, like C++, C#, and Objective-C, are intended to be direct successors to the language, while other languages have merely adopted and adapted C’s syntax. A programmer conversant in Java, PHP, Ruby, Python or Perl will have little difficulty understanding simple C programs, and in that sense, C may be thought of almost as a lingua franca among programmers.

But C did not emerge fully formed out of thin air as some programming monolith. The story of C begins in England, with a colleague of Alan Turing and a program that played checkers.

God Save the King

Christopher Strachey was known as the “person who wrote perfect programs,” according to a long profile in the journal Annals of the History of Computing. It was a reputation he acquired at the Manchester University Computing Center in 1951. Strachey ended up there, working on the school’s Ferranti Mark I computer, through an old King’s College, Cambridge, connection: Alan Turing.

Strachey was born in 1916 into a well-connected British family—his uncle, Lytton Strachey, was a founding member of the Bloomsbury Group, while his father, Oliver Strachey, was instrumental in Allied code-breaking activities during both World Wars.

That Strachey ended up being an acknowledged expert in programming and computer science would have come as something of a surprise to his public school and Cambridge University instructors. Strachey had always displayed a talent for the sciences but rarely applied himself.

If he had hopes for a career in academic research, they were dealt a serious blow by an unremarkable performance in his final exams. Instead, Strachey spent World War II working for a British electronics firm and became a schoolteacher afterward, eventually landing at Harrow, one of the most prestigious public schools in London.

In 1951, Strachey had his first chance to work with computers when he was introduced to Mike Woodger at Britain’s National Physical Laboratory. After spending a day of his Christmas vacation getting acquainted with the lab’s Pilot ACE, he spent his free time at Harrow figuring out how to teach the computer to play checkers. As Martin Campbell-Kelly, a colleague of Strachey in his later years, put it, “anyone with more experience or less confidence would have settled for a table of squares.”

This first effort didn’t come to fruition; the Pilot ACE simply didn’t have the storage capacity required to play checkers, but it did illustrate an aspect of Strachey’s interest that would prove instrumental in the development of the languages that led to C. At a time when computers were valued chiefly for their ability to quickly solve equations, Strachey was more interested in their ability to perform logical tasks (as he’d later confirm during the 1952 Association for Computing Machinery meeting).

Later that spring he found out about the Ferranti Mark I computer that had been installed at Manchester University, where Alan Turing was assistant director of the computer lab. Turing had written the programmer’s handbook, and Strachey knew him just well enough from their time together at Cambridge to ask him for a copy of the manual.

In July 1951, Strachey had a chance to visit Manchester and discuss his checkers program with Turing in person. Suitably impressed, Turing suggested that, as a first step, he write a program that would enable the Ferranti Mark I to simulate itself. A simulator would allow programmers to see, step by step, how the computer would execute a program. Such a ‘trace’ program would highlight places where the program caused bottlenecks or ran inefficiently. At a time when both computer memory and processor cycles cost a fortune, this was an important aspect of programming.

The trace program Strachey wrote included over a thousand instructions—at the time it was the longest program that had ever been written for the Ferranti Mark I. Strachey had it up and running after pulling an all-nighter, and when the program terminated, it played “God Save the King” on the computer’s speaker, according to Campbell-Kelly.

This accomplishment, by an amateur, caught the attention of Lord Halsbury, managing director of the National Research and Development Corporation, who soon recruited Strachey to spearhead the government’s efforts to promote practical applications of the rapid developments in computer science taking place at British universities.

It was in this capacity that he found out about a project at Cambridge being undertaken by a trio of programmers named David.

David and Goliath Titan

Cambridge University’s computing center had a strong service orientation. The Mathematical Laboratory’s first computers, EDSAC and EDSAC 2, were made available to researchers elsewhere at the university who wrote programs that were punched out on paper tape and fed into the machine.

At the computing center, these paper tapes were clipped to a clothesline and executed one after the other during business hours. This line of pending programs became known as the “job queue,” a term that remains in use to describe far more sophisticated means of organizing computing tasks.

Only two years after EDSAC 2 came online, the university realized that a far more powerful machine would be required soon, and in order to achieve this, they would need to purchase a commercial mainframe. The university considered both the IBM 7090 and the Ferranti Atlas, but it could afford neither of them. In 1961, Peter Hall, a division manager at Ferranti, suggested that they could develop a stripped-down version of the Atlas computer jointly with Cambridge University. Cambridge would get the prototype, dubbed “Titan,” and Ferranti would be able to market the new computer to customers who couldn’t afford the Atlas system.

In order to provide computing services to the rest of the university, this new computer would need both an operating system and at least one high-level programming language.

There was little thought given to expanding the language that had been developed for EDSAC 2. “In the early 1960s, it was common to think, ‘We are building a new computer, so we need a new programming language,’” David Hartley recalled in a 2017 podcast. Along with David Wheeler and David Barron, Hartley would be involved in the early development of this new computer’s programming language.

“The new operating system was inevitable,” according to Hartley, but a new programming language was not. “We thought this was an opportunity to have fun with a new language—which, in hindsight, was a damn stupid thing to do.”

Maurice Wilkes, who was overseeing the Titan project, felt that there was no need for a new programming language. The primary justification for the Titan was providing computational services to the rest of Cambridge University, and for this it would be best if the machine were up and running as quickly as possible and equipped with a language users were already familiar with.

Wilkes required an analysis of available programming languages before approving a proposal to develop a new language. “We chose them very carefully,” Hartley said, “in order to decide that none of them were suitable.” Notably, the working group evaluated Fortran IV without consulting Fortran users at Cambridge who could have explained the additional features included with other varieties of Fortran. Because of this, Hartley recalled the group being convinced that “we could easily define and develop something significantly better,” before noting, “this failing came home to roost in a few years.”

The trio eventually prepared a paper in June 1962 that argued that a new language was necessary, “and we got away with it, too,” Hartley concluded.

This new programming language was dubbed CPL (Cambridge Programming Language), and work was well underway by 1963. The Cambridge programmers had been joined by John Buxton and Eric Nixon, from the University of London, and CPL had been revised to stand for Combined Programming Language. As the project grew, Wilkes decided to bring on Christopher Strachey to oversee the project, and CPL soon came to mean “Christopher’s Programming Language” for those associated with it, according to Campbell-Kelly.

The group of researchers working on the language would meet at Cambridge or in London, sometimes at the University of London, but on other occasions in the artist’s studio at the Kensington townhouse Strachey shared with his sister. The room at the rear of the home was furnished with Victorian chairs and cushions on the floor, while the walls were decorated with portraits of various Bloomsbury Group members painted by one of Strachey’s relatives. This was where Strachey would “hold court,” occasionally in a dressing gown, and as David Barron recalled some years later, “we would argue the world to rights before dispersing to our various homes in the evening.”

By then, David Wheeler had moved on to other projects, leaving a team of five behind: Hartley, Barron, Buxton, Nixon, and Strachey.

Hartley enjoyed working on CPL; “this was actually quite a fun job,” he recalled. Meetings were rather informal affairs. “We’d get very heated about things and eventually start throwing paper darts [airplanes] at one another.”

The group started off with the specifications of ALGOL 60, with the goal of writing a “perfect” language: one that would be practical for a variety of users but also aesthetically satisfying and efficient.

Almost immediately, they had some difficulty prioritizing. As David Barron noted of Strachey, “It was characteristic of him to insist on minor points of difference with the same force that he insisted on major points.” One minor quibble was Strachey’s objection to the grammar of “IF … THEN … ELSE” statements. “I cannot allow my name to be associated with a definite recommendation to use ignorantly incorrect English,” was his view, as Hartley later wrote for Annals of the History of Computing. Strachey preferred “OR,” which conflicted with the way “OR” was used in nearly every other programming language in existence. Nonetheless, his preferences carried the day, and the CPL reference manual included “OR” in the place where users would have expected “ELSE.”

Valuable time was also invested in developing a way to avoid using the asterisk to indicate multiplication. Here, aesthetic concerns led to complications that delayed the implementation of a usable programming language, as complicated rules had to be developed to distinguish between “3a” meaning “3 * a” and “3a” as the name of a variable.

All the while, Cambridge users were growing increasingly frustrated with the lack of a usable programming language for the university’s new Titan computer. The specifications of the language were largely finished, but there was no compiler available. The working group had made CPL so complicated that early attempts at writing a compiler resulted in machine code that was incredibly inefficient.

From the bootstraps

In 1965, Strachey left for a few months’ stay at MIT, and on his return, he took up a position as the director of Oxford’s Programming Research Group. Meanwhile, back in Cambridge, Martin Richards had joined the CPL project. He set to work developing a limited version of CPL that could be made available to users. This “BCPL,” the ‘B’ standing for “Basic,” would need to have an effective compiler.

While at MIT, Strachey helped arrange a two-year sabbatical at that institution for Martin Richards, and in 1966, Richards brought BCPL with him to Massachusetts, where he was able to work on the BCPL compiler.

BCPL is a “bootstrap” language because its compiler is capable of self-compiling. Essentially, a small chunk of the BCPL compiler was written in assembly or machine code, and the rest of the compiler would be written in a corresponding subset of BCPL. The section of the compiler written in BCPL would be fed into the section written in assembly code, and the resultant compiler program could be used to compile any program written in BCPL.

Bootstrapping compilers dramatically simplifies the process of porting a language from one computer or operating system to another. Only the relatively small portion of the compiler written in the code that is specific to that computer needs to be changed to enable the compiler to run on another computer.

While Richards was working on the BCPL compiler at MIT, the institute was engaged in the Multics project with Bell Labs and GE. To support this project, a network linking MIT and Bell Labs had been set up.

Although the network connection between the two locations was nominally intended to facilitate work on Multics, Ken Thompson told Ars that it was socially acceptable to “prowl around” the MIT mainframes looking at other projects, and this was how he found both the code and documentation for BCPL. He downloaded it to a Bell Labs mainframe and began working with it. “I was looking for a language to do the kind of stuff that I wanted to do,” Thompson relates. “Programming was very hard in that era of proprietary big-iron operating systems.”

Around the time that Thompson was experimenting with BCPL, Bell Labs pulled out of the Multics consortium, and the computer science department where Thompson worked was temporarily without computers of any sort.

When they did finally obtain a computer, it was a hand-me-down Digital PDP-7 that wasn’t particularly powerful even by the standards of that era. Nevertheless, Thompson was able to get the first version of Unix up and running on that machine.

The PDP-7 had 8,192 “words” of memory (a word in this instance was 18 bits—the industry had not yet standardized on the 8-bit ‘byte’). Unix took up the first 4k, leaving 4k free for running programs.

Thompson took his copy of BCPL—which was CPL with reduced functionality—and further compressed it so that it would fit into the available 4k of memory on the PDP-7. In the course of doing this, he borrowed from a language he had encountered while a student at the University of California, Berkeley. That language, “SMALGOL,” was a subset of ALGOL 60 designed to run on less powerful computers.

The language that Thompson eventually ended up using on the PDP-7 was, as he described it to Ars, “BCPL semantics with a lot of SMALGOL syntax,” meaning that it looked like SMALGOL and worked like BCPL. Because this new language consisted only of the aspects of BCPL that Thompson found most useful and that could be fit into the rather cramped PDP-7, he decided to shorten the name “BCPL” to just “B.”

Earlier, Thompson had written Unix for the PDP-7 in assembler, but even in 1969 this was not an ideal way to write an operating system. It worked for the PDP-7 because that computer was fairly simple and because Thompson wrote the operating system primarily for his own amusement.

Any operating system that would be maintained and updated by multiple programmers and would run on the more sophisticated hardware available at the time would need to be written in a high-level programming language.

Thus, when Bell Labs purchased a PDP-11 for the department in 1971, Thompson decided it was time to rewrite Unix in a high-level programming language, as he told Brian Kernighan on stage at the Vintage Computer Festival (VCF) in 2019.

At the same time, Dennis Ritchie had adopted B and was adapting it to run on more powerful computers. One of the first things that he added back into B was the ability to “type” variables. Because the PDP-7 had a memory composed of 18-bit words, B could be streamlined by treating every variable as either a single word of memory or as a succession of words that were referenced by their location in the system memory. There were no fixed points or floating decimals, integers or strings. “It was all words,” according to Thompson.

Such an approach was effective on a simple machine with very little memory and a small user base, but on more complex systems, with more complex programs and many users, this could lead to idiosyncratic means of determining whether a variable was a string or a number, as well as inefficient memory usage.

Ritchie dubbed this modified language NB for “New B,” according to Thomas J. Bergin and Richard G. Gibson’s History of Programming Languages, 2nd Ed. It was installed on the mainframes in the computing center at Murray Hill, which made it available to users throughout Bell Labs.

Naturally, when Thompson decided to rewrite Unix in a high-level language, he started with NB. His first three tries ended in failure and, “being an egotist, I blamed it on the language,” Thompson recalled at VCF with a chuckle.

With each failure, Ritchie added features back into NB in a manner that made sense to him and Ken, and once he added structures—variables that store multiple distinct values in a related or ‘structured’ fashion—Thompson was able to write Unix in this new language. Ritchie and Thompson saw the addition of structures, which could be found nowhere in B, SMALGOL, BCPL or CPL, as a change significant enough to warrant renaming the programming language, and B became C.

Life in the key of C

C escaped Bell Labs in much the same way that Unix did. It helped that the PDP-11 rapidly became one of the most successful minicomputers on the market, but the cost of Unix was what made it especially attractive to universities. Any computing center with a PDP-11 could install Unix on it for nothing more than the cost of media and postage, and C came along with Unix.

The presence of C on so many university campuses was, Thompson believes, a major reason for its success. In an email, he told Ars that there were “lots of C-savvy kids going out into the world.”

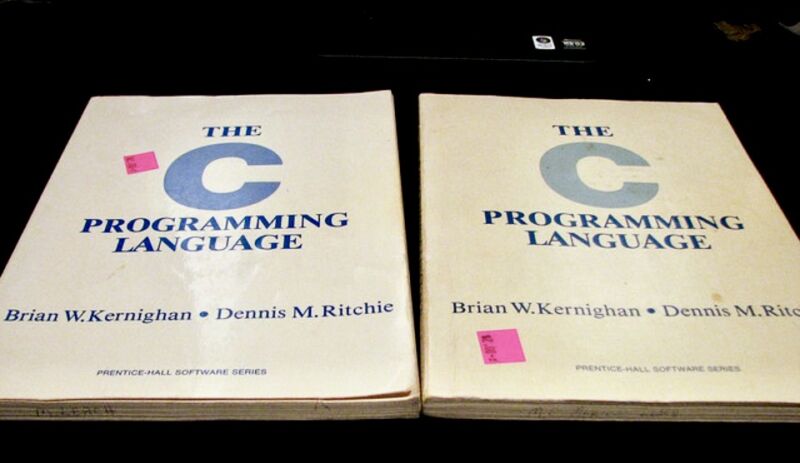

A further boost to the success of C came with the publication, in 1978, of The C Programming Language by Brian Kernighan and Dennis Ritchie. The book, a slim 228-page volume, was, even for the time, a remarkably simple and accessible work.

Widespread familiarity with C’s syntax influenced the development of many subsequent languages that bear little—if any—resemblance to C under the surface. Scripting languages such as PHP and JavaScript contain bits of programming shorthand that Thompson originally developed in order to fit B into the limited memory of the PDP-7. Two examples are the “++” and “--” increment and decrement operators. With only 4k to play around with, shortening “x=x+1” to “x++” saved a not inconsiderable amount of space.

Thompson never believed that C would become as widespread as it did. “There were a lot of very different, very interesting, and very useful languages floating around,” Thompson told Ars. “My sense of aesthetics told me that one language could not cover the universe.”

Like Unix, C was a success born of failure. In both instances, programmers took the best parts of projects that were doomed because they sought to do too much. Multics, which spawned Unix, was—at its peak—only used on about 80 computers worldwide, and CPL, which ultimately led to C, was abandoned by Cambridge researchers in 1967 without being completed.

When Christopher Strachey started the Programming Research Group at Oxford, he said, “the separation of practical and theoretical work is artificial and injurious.” And while CPL was intended to combine practical and theoretical concerns, in execution, its focus was too theoretical. The working group designing CPL was not trying to program in CPL.

But, if Strachey was unable to achieve the synthesis between theory and practicality with CPL, he definitely had the right attitude. “C was written to write Unix,” recalls Thompson, and “Unix was written for all of us to write programs.”

Richard Jensen is a writer and historic preservation specialist in Sioux Falls, South Dakota. Jensen has previously contributed to Ars on subjects like nationwide "right to repair" efforts and the history of Unix. He has more library cards than credit cards, and no one is surprised by this.

December 09, 2020 at 07:30PM

“A damn stupid thing to do”—the origins of C - Ars Technica